
Wednesday, October 31, 2007

Invention: Metal 'muscles'

Metal 'muscles'

Autonomous robots, micro-scale air vehicles, and prosthetic limbs are all supposed to operate for long periods without recharging or refuelling, making efficient energy supply crucial.

Nature's choice is to provide chemical power for natural actuators like muscles. Human engineers have typically taken another route, relying on converting electrical energy into mechanical energy using motors, hydraulic systems, or piezoelectric actuators.

This is much less efficient, meaning even the most athletically capable robot must be wired to a stationary power source for much of the time.

The ideal solution is an artificial muscle that can convert chemical energy directly and efficiently into mechanical energy, says Ray Baughman, a physicist at the NanoTech Institute at the University of Texas at Dallas, US.

Baughman says he has built such a device made of a "shape memory" alloy of nickel and titanium. The metal is coated with a platinum catalyst and placed in a device that allows methanol to be drawn along the surface.

Exposing the surface to air causes the methanol to be oxidised, which heats the alloy and makes it bend in a pre-determined way. Cutting off the methanol supply lets the alloy cool and return to its original shape.
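The heat-driven cycle described above (fuel flows, catalytic heating drives the alloy's phase change and it bends; fuel stops, the alloy cools and recovers) can be illustrated with a minimal lumped-temperature model. This is a sketch under stated assumptions, not Baughman's design: the transformation temperatures, heating rate and cooling constant are hypothetical round numbers, cooling is modelled as simple Newtonian heat loss to ambient air, and the phase change uses a common linear hysteresis approximation between the cool (martensite) phase and the hot (austenite) phase that holds the pre-set bend.

```python
from dataclasses import dataclass
from typing import List


def clamp01(x: float) -> float:
    """Limit a phase fraction to the range [0, 1]."""
    return max(0.0, min(1.0, x))


@dataclass
class SmaParams:
    # Hypothetical values, chosen only to illustrate the cycle (degrees C and seconds).
    t_ambient: float = 20.0
    m_f: float = 40.0        # martensite finish: fully back in the cool phase
    m_s: float = 50.0        # martensite start
    a_s: float = 60.0        # austenite start: bending begins
    a_f: float = 70.0        # austenite finish: fully bent
    heat_rate: float = 8.0   # degrees C per second added while methanol is oxidised
    cool_coeff: float = 0.1  # 1/s, Newtonian heat loss to ambient air


def simulate(fuel_on: List[bool], p: SmaParams, dt: float = 1.0) -> List[float]:
    """Return the austenite (bent) fraction after each time step."""
    temp = p.t_ambient
    xi = 0.0  # start fully martensitic, i.e. in the original shape
    history = []
    for on in fuel_on:
        heating = p.heat_rate if on else 0.0
        # Explicit Euler step of dT/dt = heating - k * (T - T_ambient)
        temp += dt * (heating - p.cool_coeff * (temp - p.t_ambient))
        if on:
            # Heating branch: the fraction can only grow, following the As..Af ramp
            xi = max(xi, clamp01((temp - p.a_s) / (p.a_f - p.a_s)))
        else:
            # Cooling branch: the fraction can only shrink, following the Ms..Mf ramp
            xi = min(xi, clamp01((temp - p.m_f) / (p.m_s - p.m_f)))
        history.append(xi)
    return history
```

Running `simulate([True] * 60 + [False] * 60, SmaParams())` models one minute of fuel followed by one minute without it; with these made-up numbers the element is fully bent at the end of the fuelled minute (`h[59] == 1.0`) and fully relaxed a minute after the fuel stops (`h[-1] == 0.0`). The gap between the heating thresholds (As, Af) and the cooling thresholds (Ms, Mf) is what makes the response hysteretic: the alloy straightens at a lower temperature than the one at which it bent.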

Baughman says the device can generate stresses 500 times greater than human muscle and believes further significant improvements should be possible.
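To put the 500-fold figure in perspective, here is the arithmetic under one loudly flagged assumption: that the baseline is the commonly quoted peak stress of about 0.35 MPa for mammalian skeletal muscle. The article does not say which baseline Baughman used.

$$
\sigma_{\text{alloy}} \approx 500 \times \sigma_{\text{muscle}} \approx 500 \times 0.35\ \text{MPa} \approx 175\ \text{MPa}
$$

On that assumption, the alloy would be working at roughly 175 N on every square millimetre of its cross-section, about the weight of an 18 kg mass.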


'Circuit-board' clothing

"The integration of electronic components into clothing is becoming an increasingly important area in the field known as wearable computing," says the electronics giant Philips in one of its latest patent filings.

The textile industry can today produce threads that are highly conductive and as flexible as regular fabric so that sensors in garments can measure biometric characteristics, like the wearer's temperature or heart rate. Such garments could have important applications in medicine and sports.

The trouble is that weaving sensors into these textiles is tricky. Not only must the threads be highly durable to survive the weaving process but, because fabrics are woven on a large scale and then cut to size, it is hard to ensure the sensors will end up where they are needed in the garment. This fabrication method also limits the choice of sensors that can be used.

So Philips is pioneering another approach. This involves making a fabric that acts like a flexible circuit board to which any variety of sensors can then be attached. The company suggests weaving a fabric out of both conducting and non-conducting fibres, and then cutting the garment to size.

Sensors can be pinned to the garment where they are needed, with the conducting threads providing both power and communications between different sensors. The resulting fabric can easily be tailored to any size and can carry a variety of sensors for monitoring the wearer's body.
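The filing is summarised here only in outline, so the following is a hypothetical sketch of how such a "fabric circuit board" might be modelled: conducting threads run in both directions at a fixed pitch with non-conducting fibres between them, and a pinned sensor works only if its contact lands close enough to a conducting thread. The square grid, the 10 mm pitch and the 1 mm contact tolerance are assumptions for illustration, not details from Philips.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Fabric:
    """A cut piece of fabric with conducting threads on a square grid (hypothetical layout)."""
    width_mm: float
    height_mm: float
    pitch_mm: float = 10.0     # assumed spacing between conducting threads
    tolerance_mm: float = 1.0  # assumed max gap between a pin and a thread


def nearest_thread(pos_mm: float, pitch_mm: float) -> tuple[int, float]:
    """Index of the closest conducting thread and the distance to it."""
    index = round(pos_mm / pitch_mm)
    return index, abs(pos_mm - index * pitch_mm)


def connect(fabric: Fabric, x_mm: float, y_mm: float) -> tuple[int, int] | None:
    """Return the (column, row) threads a sensor pinned at (x, y) touches, or None."""
    if not (0.0 <= x_mm <= fabric.width_mm and 0.0 <= y_mm <= fabric.height_mm):
        return None  # pinned outside the cut garment
    col, dx = nearest_thread(x_mm, fabric.pitch_mm)
    row, dy = nearest_thread(y_mm, fabric.pitch_mm)
    if dx > fabric.tolerance_mm or dy > fabric.tolerance_mm:
        return None  # landed on non-conducting fibres only
    return col, row
```

Because the grid repeats across the whole bolt of cloth, cutting a larger or smaller garment only changes `width_mm` and `height_mm`; a sensor pinned at (120.4, 250) mm on a 400 x 600 mm panel lands on column 12, row 25. Treating one thread direction as power and the other as a data bus is one plausible reading of "power but also communications"; the article does not say how Philips routes the two.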


Laser near-vision restoration

The long-sightedness that afflicts people in their 40s and 50s is known as presbyopia, and is caused by a gradual stiffening of the lens in the eye. This is the result of the continual generation of new cells on top of older ones on the lens, a process that makes it stiffer and thicker.

In practice, this inflexibility prevents people focusing on near objects and means they must rely on reading glasses instead.

Now Szymon Suckewer, a mechanical engineer and laser specialist at Princeton University, New Jersey, US, says it may be possible to remove excess cells in the lens by using short laser pulses to vapourise them.

The process is entirely different from current laser treatments for vision, which change the shape of the cornea. Instead, it should make the lens fully flexible again, giving sufferers back their near vision.

However, Suckewer makes no mention in his patent application of any tests involving animal or human subjects. So best wait and see how well the idea works before throwing those reading glasses away.

Technorati : Invention:, Metal, muscles

Massive Black Hole Smashes Record

Using two NASA satellites, astronomers have discovered the heftiest known black hole to orbit a star. The new black hole, with a mass 24 to 33 times that of our Sun, is more massive than scientists expected for a black hole that formed from a dying star.

The newly discovered object belongs to the category of "stellar-mass" black holes. Formed in the death throes of massive stars, they are smaller than the monster black holes found in galactic cores. The previous record holder for the largest stellar-mass black hole is a 16-solar-mass black hole in the galaxy M33, announced on October 17.

"We weren't expecting to find a stellar-mass black hole this massive," says Andrea Prestwich of the Harvard-Smithsonian Center for Astrophysics in Cambridge, Mass., lead author of the discovery paper in the November 1 Astrophysical Journal Letters. "It seems likely that black holes that form from dying stars can be much larger than we had realized."

The black hole is located in the nearby dwarf galaxy IC 10, 1.8 million light-years from Earth in the constellation Cassiopeia. Prestwich's team could measure the black hole's mass because it has an orbiting companion: a hot, highly evolved star. The star is ejecting gas in the form of a wind. Some of this material spirals toward the black hole, heats up, and gives off powerful X-rays before crossing the point of no return.

In November 2006, Prestwich and her colleagues observed the dwarf galaxy with NASA's Chandra X-ray Observatory. The group discovered that the galaxy's brightest X-ray source, IC 10 X-1, exhibits sharp changes in X-ray brightness. Such behavior suggests a star periodically passing in front of a companion black hole and blocking the X-rays, creating an eclipse. In late November, NASA's Swift satellite confirmed the eclipses and revealed details about the star's orbit. The star in IC 10 X-1 appears to orbit in a plane that lies nearly edge-on to Earth's line of sight. The Swift observations, as well as observations from the Gemini Telescope in Hawaii, told Prestwich and her group how fast the two objects orbit each other. Calculations showed that the companion black hole has a mass of at least 24 Suns.
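The step from orbital speeds to "at least 24 Suns" is usually made with the binary mass function. For a circular orbit with period P, where the visible star's line-of-sight velocity swings with semi-amplitude K, f = P·K^3 / (2πG) = (M_BH·sin i)^3 / (M_BH + M_star)^2. Because sin i is at most 1 and the star's mass is positive, f is a hard lower bound on the black hole's mass; adding an estimate of the star's mass and the nearly edge-on inclination implied by the eclipses tightens it. The sketch below assumes a circular orbit, and any numbers passed to it are placeholders: the article gives neither the team's measured velocities nor their exact procedure.

```python
import math

G = 6.674e-11       # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30    # solar mass, kg


def mass_function(period_s: float, k_m_per_s: float) -> float:
    """Binary mass function P K^3 / (2 pi G) for a circular orbit, in solar masses."""
    return period_s * k_m_per_s ** 3 / (2.0 * math.pi * G) / M_SUN


def black_hole_mass(f_msun: float, star_msun: float, incl_deg: float = 90.0) -> float:
    """Solve (M sin i)^3 / (M + M_star)^2 = f for M (solar masses) by bisection.

    The left-hand side increases monotonically with M, so bisection is safe.
    """
    s3 = math.sin(math.radians(incl_deg)) ** 3
    if s3 <= 0.0:
        raise ValueError("inclination must be greater than 0 degrees")

    def residual(m: float) -> float:
        return m ** 3 * s3 / (m + star_msun) ** 2 - f_msun

    lo, hi = 0.0, max(1.0, f_msun)
    while residual(hi) < 0.0:   # expand the bracket until it contains the root
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if residual(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

Calling `black_hole_mass(f, 0.0)` returns `f` itself, which is why the mass function alone is only a floor: any positive mass for the companion star, or any tilt away from edge-on, pushes the answer up.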

There are still some uncertainties in the black hole's mass estimate, but as Prestwich notes, "Future optical observations will provide a final check. Any refinements in the IC 10 X-1 measurement are likely to increase the black hole's mass rather than reduce it."

The black hole's large mass is surprising because massive stars generate powerful winds that blow off a large fraction of the star's mass before it explodes. Calculations suggest massive stars in our galaxy leave behind black holes no heavier than about 15 to 20 Suns.

The IC 10 X-1 black hole has gained mass since its birth by gobbling up gas from its companion star, but the rate is so slow that the black hole would have gained no more than 1 or 2 solar masses. "This black hole was born fat; it didn't grow fat," says astrophysicist Richard Mushotzky of NASA Goddard Space Flight Center in Greenbelt, Md., who is not a member of the discovery team.

The progenitor star probably started its life with 60 or more solar masses. Like its host galaxy, it was probably deficient in elements heavier than hydrogen and helium. In massive, luminous stars with a high fraction of heavy elements, the extra electrons of elements such as carbon and oxygen "feel" the outward pressure of light and are thus more susceptible to being swept away in stellar winds. But with its low fraction of heavy elements, the IC 10 X-1 progenitor shed comparatively little mass before it exploded, so it could leave behind a heavier black hole.

"Massive stars in our galaxy today are probably not producing very heavy stellar-mass black holes like this one," says coauthor Roy Kilgard of Wesleyan University in Middletown, Conn. "But there could be millions of heavy stellar-mass black holes lurking out there that were produced early in the Milky Way's history, before it had a chance to build up heavy elements."

A New Video Game with Expressive Characters Leads to Emotional Attachment

When one thinks of the leaders in computer animation, a few familiar film studios come to mind, namely DreamWorks and Pixar. Traditional movie companies like Fox and Warner Brothers have also made successful forays into animated fare.


Video games, however, have rarely been known for the artistry of their animation, for two reasons. First, while they almost inevitably fall short, most video games of any genre try to look realistic: realistic cars, realistic football players, even plausibly "realistic" dragons and aliens. But what defines great animated characters like Mickey Mouse and Shrek is not any notion of realism but an exaggerated yet unthreatening expressiveness. That is one reason so few of the classic animated characters are human: anthropomorphized animals give the artist much more creative leeway.

Just as important, in those cases when designers have gone for an animated look, technology has simply not allowed games to come anywhere near the fluidity, detail and depth possible in a noninteractive film. On top of that, game companies often undermine even brilliant graphics with lackluster voice acting.

All that is changing this week with the release of Insomniac Games' Ratchet & Clank Future: Tools of Destruction for Sony's PlayStation 3 console. The new Ratchet is a watershed for gaming because it provides the first interactive entertainment experience that truly feels like inhabiting a world-class animated film.

Ratchet is expressive and chock-full of family-friendly visual humor, delivered with a polish and sophistication that have previously been the exclusive province of movies like "Toy Story" and "Monsters, Inc." (The game is being released with a rating of E10+, which means it has been deemed appropriate for all but the youngest children.)

In addition to its creative achievement, Ratchet may also prove important in the incessant turf battle that is the multibillion-dollar video game business. Put baldly, Ratchet is the best game yet for the PlayStation 3, which has lagged behind Microsoft's Xbox 360 and Nintendo's Wii systems in the current generation of console wars. The PlayStation 3 has more silicon horsepower under the hood than either the 360 or the Wii, but until now there has not been a game for it that seemed to put all that muscle to use.

Ratchet, however, will surely become a showcase game for Sony because, given current technology, it seems unlikely that any game for either the 360 or the Wii will be able to match Ratchet's overall visual quality.

So how did Insomniac do it, and who is this Ratchet anyway?

In the best tradition of animation, Ratchet is a mischievous yet good-hearted member of the not-quite-human, not-quite-animal Lombax species. He is covered in yellow and orange fur, and he has a tail and long, floppy ears. Clank is his sage robot sidekick, and together they traverse the galaxy defeating the forces of evil. The first Ratchet game was released in 2002; since then the series has sold more than 12 million copies. As the new game begins, the once-idyllic city of Metropolis has come under attack by the legions of the big bad guy Emperor Tachyon.

"Ratchet has always been our opportunity to push the lighter side of gaming," said Ted Price, Insomniac's chief executive and founder, in a recent interview here. "Ratchet has allowed us to make jokes on those two pop culture levels where if the kids don't get it, the parents will.

"For instance, the character Courtney Gears was a big feature in the earlier game Ratchet & Clank: Up Your Arsenal. Actually, a lot of us are huge Pixar fans, and we're inspired by their work because those guys do a great job of telling stories, and we think an area where we can drive games forward is not just in the visuals but in the area of character development." (For a kick, check out clips of Courtney Gears on YouTube.)

In a conference room dominated by an immense flat-panel screen, Brian Allgeier, the creative director of the new game, explained how new technology can help create a more engaging game experience. "The Ratchet character now has 90 joints just in his face," he said, referring to the character's virtual wrinkles and bones. "On the PlayStation 2, he had 112 joints in his whole body. So now we have a lot more power to make the characters more believable and expressive."

"Ultimately we're trying to create more emotional intimacy," he said. "That's one of the things that movies do really well with the close-up, where you can really see the emotions on the character's face. But in games, so often you're just seeing the action from a wide shot behind the character you're controlling and you don't have that emotional connection. So that's what we're going for."
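Allgeier's "joints" are the bones of a skeletal rig: each joint stores a small transform relative to its parent, and the game works out where every joint sits by composing those transforms from the root outward, a process known as forward kinematics. More joints in the face means more independently posable points for brows, lids and wrinkles. The following is a minimal 2D sketch of that composition on a toy chain; the joint names, lengths and angles are hypothetical, and Insomniac's actual rig is not described in the article.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Joint:
    name: str
    parent: Optional[int]  # index of the parent joint; None for the root
    length: float          # offset from the parent along the parent's direction
    angle: float           # rotation relative to the parent, in radians


def world_positions(joints: List[Joint]) -> List[Tuple[float, float]]:
    """Forward kinematics in 2D. Joints must be listed parents-first."""
    positions: List[Tuple[float, float]] = []
    rotations: List[float] = []
    for joint in joints:
        if joint.parent is None:
            positions.append((0.0, 0.0))
            rotations.append(joint.angle)
            continue
        px, py = positions[joint.parent]
        parent_rot = rotations[joint.parent]
        # Place this joint at the end of the parent's "bone" ...
        positions.append((px + joint.length * math.cos(parent_rot),
                          py + joint.length * math.sin(parent_rot)))
        # ... and accumulate rotation so children inherit the parent's pose.
        rotations.append(parent_rot + joint.angle)
    return positions


# Hypothetical three-joint chain: head -> jaw -> lip corner.
rig = [
    Joint("head", None, 0.0, 0.0),
    Joint("jaw", 0, 1.0, math.pi / 2),
    Joint("lip_corner", 1, 0.5, 0.0),
]
```

Rotating the jaw joint moves every joint below it in the hierarchy, which is why adding joints to a face multiplies the expressions an animator can pose. Production engines do the same composition with 3D matrices or quaternions and then bind (skin) the character mesh to the joints.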

Like most games, the new Ratchet includes a variety of weapons, but they tend toward whimsical gear like the Groovitron. Being pursued by a pack of bloodthirsty robot pirates? Bust out the Groovitron, a floating, spinning disco ball that compels your foes to dance to its funky beats while you make a getaway.

The biggest problem with Ratchet is that at times it is so lushly compelling that you find yourself just staring at the screen, as if it were a movie, rather than actually playing the game. And that, of course, is not a bad problem at all.

Technorati : emotional game, games, new game

Waste management: IBM to recycle silicon for solar


IBM announced today that it has created a process allowing its manufacturing facilities to repurpose otherwise scrap semiconductor wafers.

Since the silicon wafers need to be nearly flawless to be used in computers, mobile phones, video games and other consumer electronics, the imperfect ones are normally erased with acidic chemicals and discarded. IBM had been sandblasting its flawed wafers to remove proprietary material. Some of the pieces, called "monitors," are reused for test purposes.

The new process cleans the silicon pieces with water and an abrasive pad, which leaves them in better condition for reuse. Cleaning an 8-in. wafer takes about one minute. Eric White, the inventor of the process, said IBM can now get five or six monitor wafers out of one that would have been crushed and discarded. The cleaned wafers can also be sold to the solar-cell industry, which has a high demand for silicon to use in solar panels. White said a silicon shortage would have to be "extreme" before the reclaimed wafers were used in consumer electronics, and that IBM does not plan to do so.

The IBM site in Burlington, Vt., has been using the process and reported savings of more than $500,000 in 2006. Expansion of the technique has begun at IBM's site in East Fishkill, N.Y., and the company estimates its 2007 savings will exceed $1.5 million. The Vermont and New York plants are IBM's only semiconductor manufacturing sites.

Chris Voce, an analyst at Forrester Research Inc., said he doesn't anticipate savings for the end user, but added, "When a semiconductor company can improve its process and drive efficiency into their manufacturing process, that's always great for their margins, but it's even better when there are broader benefits for the environment." IBM estimates that the semiconductor industry discards as many as 3 million wafers worldwide each year.

IBM plans to patent the new process and provide details to the semiconductor manufacturing industry.

Technorati : IBM, recycle silicon, solar

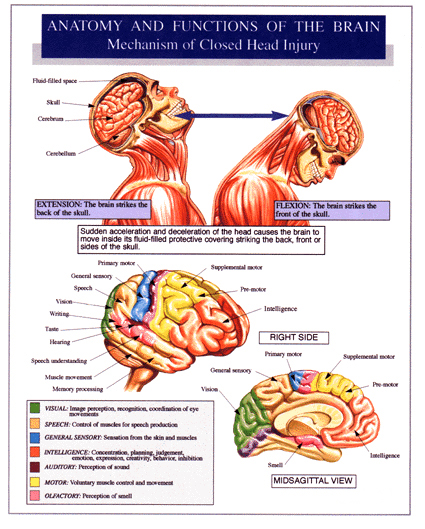

Stem cells can improve memory after brain injury


New UC Irvine research is among the first to demonstrate that neural stem cells may help to restore memory after brain damage.

In the study, mice with brain injuries showed enhanced memory, similar to the level found in healthy mice, up to three months after receiving a stem cell treatment. Scientists believe the stem cells secreted proteins called neurotrophins that protected vulnerable cells from death and rescued memory. This raises hope that a drug to boost production of these proteins could be developed to restore the ability to remember in patients with neuronal loss.

"Our research provides clear evidence that stem cells can reverse memory loss," said Frank LaFerla, professor of neurobiology and behavior at UCI. "This gives us hope that stem cells someday could help restore brain function in humans suffering from a wide range of diseases and injuries that impair memory formation."

The results of the study appear Oct. 31 in the Journal of Neuroscience.

LaFerla, Mathew Blurton-Jones and Tritia Yamasaki performed their experiments using a new type of genetically engineered mouse that develops brain lesions in areas designated by the scientists. For this study, they destroyed cells in the hippocampus, an area of the brain vital to memory formation and where neurons often die.

To test memory, the researchers gave place and object recognition tests to healthy mice and mice with brain injuries. Memories of place depend upon the hippocampus, and memories of objects depend more upon the cortex. In the place test, healthy mice remembered their surroundings about 70 percent of the time, but mice with brain injuries remembered them just 40 percent of the time. In the object test, healthy mice remembered objects about 80 percent of the time, while injured mice did so only about 65 percent of the time.
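The article reports memory as a percentage "of the time". In rodent object- and place-recognition tests, one common scoring method is a recognition index: the share of total exploration time a mouse spends on the novel object (or on a familiar object moved to a new place), where 50 percent means no preference. Whether the UCI figures were computed exactly this way is an assumption; the article does not say. A minimal sketch of that score:

```python
def recognition_index(novel_s: float, familiar_s: float) -> float:
    """Percent of exploration time spent on the novel (or moved) object.

    50.0 means no preference, i.e. no evidence the mouse remembers the familiar one.
    """
    total = novel_s + familiar_s
    if total <= 0.0:
        raise ValueError("no exploration time recorded")
    return 100.0 * novel_s / total
```

For example, a mouse that spends 21 seconds on the moved object and 9 seconds on the unmoved one scores `recognition_index(21.0, 9.0)`, which is 70.0. On this reading, a score at or below 50 would indicate no measurable memory of the familiar arrangement.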

The scientists then set out to learn whether neural stem cells from a mouse could improve memory in mice with brain injuries. To test this, they injected each mouse with about 200,000 neural stem cells that were engineered to appear green under ultraviolet light. The color allows the scientists to track the stem cells inside the mouse brain after transplantation.

Three months after implanting the stem cells, the mice were tested on place recognition. The researchers found that mice with brain injuries that also received stem cells remembered their surroundings about 70 percent of the time, the same level as healthy mice. In contrast, control mice that didn't receive stem cells still had memory impairments.

Next, the scientists took a closer look at how the green-colored stem cells behaved in the mouse brain. They found that only about 4 percent of them turned into neurons, indicating the stem cells were not improving memory simply by replacing the dead brain cells. In the healthy mice, the stem cells migrated throughout the brain, but in the mice with neuronal loss, the cells congregated in the hippocampus, the area of the injury. Interestingly, mice that had been treated with stem cells had more neurons four months after the transplantation than mice that had not been treated.

"We know that very few of the cells are becoming neurons, so we think that the stem cells are instead enhancing the local brain microenvironment," Blurton-Jones said. "We have evidence suggesting that the stem cells provide support to vulnerable and injured neurons, keeping them alive and functional by making beneficial proteins called neurotrophins."

If supplemental neurotrophins are in fact at the root of memory enhancement, scientists could try to create a drug that enhances the release or production of these proteins. Scientists then could spend less time coaxing stem cells to turn into other types of cells, at least as it relates to memory research.

"Much of the focus in stem cell research has been how to turn them into different types of cells such as neurons, but maybe that is not always necessary," Yamasaki said. "In this case, we did not have to make neurons to improve memory."

UCI scientists Debbi Morrissette, Masashi Kitazawa and Salvatore Oddo also worked on this study, which was funded by the National Institute on Aging, the National Institutes of Health, and a California Institute for Regenerative Medicine postdoctoral scholar award.

The Google phone is inching closer to reality

The Google phone: Has a wireless upheaval begun?

Questions remain, but analysts expect a Google phone by mid-2008

The Google phone is inching closer to reality, with wireless handhelds running Google Inc. applications and operating software expected in the first half of 2008, several industry analysts said today.

Some see Google's model as revolutionary in the U.S., where nearly all customers buy their cellular phones from a wireless carrier and are locked into a contract with that carrier. But Google's entry could signal a more open system where a customer buys the Google phone and then chooses a carrier, they noted.

The Wall Street Journal today cited unnamed sources and said that Google is expected to announce software within two weeks that would run on hardware from other vendors. The Google phone is expected to be available by mid-2008. The company did not comment.

Last week at the semiannual CTIA show in San Francisco, several analysts said they had heard rumors that Google would be offering software to Taiwan-based device maker High Tech Computer Corp. (HTC) for the Google phone.

Today, Gartner Inc. analyst Phillip Redman said the rumor was still that the Google phone "is coming from HTC for next year, [with] 50,000 devices initially."

HTC could not be reached for immediate comment.

Lewis Ward, an analyst at Framingham, Mass.-based market research company IDC, said Google is clearly working on software for a phone. But after giving a presentation on emerging markets at CTIA last week, he said, "It didn't sound like it was on HTC after all."

Unlike several analysts who said that Google could face a fight from carriers opposed to open networks and open devices, Ward and Redman said some carriers will cooperate with Google. "It's possible some carriers will work with Google," Ward said. "AT&T seems to be more open already with its iPhone support and other things, while T-Mobile and Sprint Nextel may be more open than Verizon Wireless."

Redman said that Google's "brand is attractive, so I think there will be takers" for building hardware and for providing network support.

At CTIA, Ward said a Google phone would make a wireless portal out of what Google already provides on a wired network to a PC, such as maps, social networking and even video sharing.

"This is about Google as a portal," Ward said last week. "This is fundamentally about wireless and wire-line converging."

Ward said Google's plans for its phone software are still up in the air. "What's unclear also is whether it will be a Linux free and open [operating system] running on top of the hardware, with applets and widgets and search and all the advanced stuff that Google has done in the past."

Jeffrey Kagan, an independent wireless analyst based in Atlanta, said many questions are raised by Google's proposition, including what the phone could be named. "Will it be a regular phone, or will it be more like the Apple iPhone? How will customers pay for it? Will it be different from the traditional way we use and pay for wireless phones? There are so many questions," Kagan said.

Like Apple Inc. with the iPhone, "Google could be very successful if they crack the code," Kagan added. "The cell phone industry ... is going through enormous change and expansion. Many ideas will be tried. Some will work, and some will fail."

Technorati : Google phone

Tuesday, October 30, 2007

New technology improves the reliability of wind turbines

The world's first commercial Brushless Doubly-Fed Generator (BDFG) is to be installed on a 20kW turbine at or close to the University of Cambridge Engineering Department's Electrical Engineering Division Building on the West Cambridge site by early 2008. This will help the University meet its obligations under new legislation, which requires a new building to obtain ten percent of its electricity from renewable sources.

The research team, led by Dr Richard McMahon, have developed a new generator technology for the wind turbine industry and brought it to the point of commercial exploitation. This type of generator can be used in a wide spectrum of wind turbines, ranging from multi-megawatt systems for wind farms down to micro turbines used for domestic power generation.

Research in Cambridge on this type of generator was started by Professor Williamson in the 1990s and has been carried on since 1999 by Richard and his team, who are collaborating with Durham's Head of Engineering, Professor Peter Tavner. The research has recently matured, enabling practical and complete designs to be made with confidence. Wind Technologies Ltd has recently been founded to exploit the technology.

"We are very excited about the new installation. This will be the first time a BDFG is used commercially. The benefits to the wind power industry are clear: higher reliability, lower maintenance and lower production costs," says co-researcher and Managing Director of Wind Technologies, Dr Ehsan Abdi. "The West Cambridge medium-size turbine should successfully demonstrate the applicability of the new generator, and we hope it will encourage the developers of other new construction projects to consider local wind-powered electricity generation to meet their obligations," adds Ehsan.

On a larger scale, a 600kW generator built by Wind Technologies is to be tested on a DeWind turbine in Germany, starting next spring. Its planned one-year test should demonstrate the improved performance of the BDFG technology to the key players in this industry. "This will put Wind Technologies in a position of strength in subsequent discussions on a technology trade sale, licensing or partnering with large generator manufacturers, which is the strategy of choice for tackling this concentrated marketplace," says Ehsan.

A contemporary Brushless Doubly-Fed Machine (BDFM) is a single-frame induction machine with two three-phase stator windings of different pole numbers and a special rotor design. Typically, one stator winding is connected to the mains or grid, and hence has a fixed frequency, while the other is supplied with variable voltage at variable frequency from a converter.
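
The standard synchronous-mode speed relation for a BDFM shows why one fixed-frequency winding and one converter-fed winding together give variable-speed operation: n = 60(f1 + f2)/(p1 + p2) rpm, where f1 and f2 are the two winding frequencies and p1 and p2 are their pole pairs. The minimal Python sketch below assumes, purely for illustration, a 50 Hz grid and 4-pole and 8-pole windings. These are not figures for the Cambridge or Wind Technologies machines.

```python
def bdfm_shaft_speed_rpm(f_grid_hz, f_conv_hz, poles_grid, poles_conv):
    """Shaft speed (rpm) of a brushless doubly-fed machine in synchronous mode.

    poles_grid and poles_conv are pole *numbers*, so the pole pairs are
    half of each. A negative f_conv_hz stands for the converter winding
    being driven with the opposite phase sequence, which pulls the speed
    below the "natural" speed reached at f_conv_hz = 0.
    """
    pole_pairs = (poles_grid + poles_conv) / 2
    return 60.0 * (f_grid_hz + f_conv_hz) / pole_pairs


if __name__ == "__main__":
    # Illustrative assumptions: 50 Hz grid, 4-pole and 8-pole stator windings.
    for f_conv in (-10.0, 0.0, 10.0):
        speed = bdfm_shaft_speed_rpm(50.0, f_conv, 4, 8)
        print(f"converter at {f_conv:+.0f} Hz -> {speed:.0f} rpm")
```

With these assumed values the shaft turns at 500 rpm when the converter supplies 0 Hz, and at 400 or 600 rpm when it supplies -10 or +10 Hz. Only the converter frequency changes, while the grid winding stays locked to 50 Hz.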

More than 90% of newly installed wind turbines worldwide generate electricity with a slip-ring generator. Slip-ring generators have drawbacks, particularly the additional cost and bulk of a machine that incorporates slip-rings and the need to maintain the brush gear, including replacing the brushes regularly. Studies have shown that brush-gear problems are a significant issue in wind turbine operation and reliability, and that the problem will be more severe in offshore machines, where winds are stronger and access is more difficult.

The project received the Scientific Instrument Makers Award and the Cambridge University Entrepreneurs Business Idea Award in 2004. In 2005, the Institution of Electrical Engineers, now the Institution of Engineering and Technology, added its Innovation in Engineering Award. The company has recently received grants from the Cambridge University Challenge Fund and the East of England Development Agency to carry out a market assessment, file patents and complete the pre-production prototype.

Harnessing wind power for electricity generation is becoming ever more common, both in large-scale wind farms and, increasingly, in small domestic installations; the UK is the world's leading market for micro wind generation. "We hope that our generator, through offering high reliability and low maintenance, will significantly contribute to the wider adoption of wind power generation, particularly in offshore developments. This will lead to significant reductions in CO2 emissions and further reduce our dependency on fossil fuels," says Ehsan.

Technorati : improves, reliability, technology, turbines, wind

String Theory's Next Top Model

Ernest Rutherford used to tell his physics students that if they couldn't explain a concept to a barmaid, they didn't really understand the concept. When it comes to the cosmological implications of string theory, barmaids and physicists alike are struggling, a predicament that SLAC string theorist Shamit Kachru hopes to resolve soon.

String theory is currently the most popular candidate for a unified theory of the fundamental forces, but it is not completely understood, and experimental evidence is notoriously elusive. Physicists can, however, gain crucial insight into the theory by evaluating how accurately its models can predict the observed universe.

Using this indirect approach, Kachru, in collaboration with theorists at Rutgers University and the Massachusetts Institute of Technology, sought models that could reproduce inflation, the prevailing cosmological paradigm in which the nascent universe experienced a fleeting period of exponential expansion.

Although there is already a substantial body of literature presenting such models (spawned in part by publications by Kachru and his Stanford and SLAC colleagues Renata Kallosh, Andrei Linde and Eva Silverstein in 2003), the complexity of the models leaves room for doubt.

"They incorporate inflation, and they're the most realistic models of string theory," Kachru said, "but they're complicated. They're fancy. They have a lot of 'moving parts,' and we need to fine-tune all of them, so we can't verify anything to a high degree of accuracy. It forces us to ask: are we confident that we really understand what's going on?"

To achieve a comprehensive understanding of how inflation can be embedded in string theory, Kachru and his collaborators employed a pedagogical tactic. "What we wanted was an explicit 'toy' model," Kachru explained. "The goal wasn't to have something realistic, but to allow us to understand everything to every detail."

"There are deep conceptual questions about how inflation is supposed to work," Kachru continued. "In order to understand these issues, it's best to have a simple model. There's so much clutter in the complicated examples, you can't disentangle the conceptual issues from the clutter."

The group investigated three versions of the simplest formulation of string theory, and found that they were incompatible with inflation. "This means we're going to have to consider slightly more complicated scenarios," said Kachru. "There are a lot of levels between this and the fancier working models, so we'll find one eventually."

Kachru and his colleagues published their work in Physical Review Letters D, providing a framework for others in search of simple inflationary models of string theory. "There are so many successful models out there that incorporate string theory and inflation, so we'll undoubtedly find a simpler version," Kachru said.

Laser Surgery Can Cut Flesh With Micro-explosions

Laser Surgery Can Cut Flesh With Micro-explosions Or With Burning

Lasers are at the cutting edge of surgery. From cosmetic to brain surgery, intense beams of coherent light are gradually replacing the steel scalpel for many procedures.

Despite this increasing popularity, there is still a lot that scientists do not know about the ways in which laser light interacts with living tissue. Now, some of these basic questions have been answered in the first investigation of how ultraviolet lasers -- similar to those used in LASIK eye surgery -- cut living tissues.

The effect that powerful lasers have on actual flesh varies both with the wavelength, or color, of the light and the duration of the pulses that they produce. The specific wavelengths of light that are absorbed by, reflected from or pass through different types of tissue can vary substantially. Therefore, different types of lasers work best in different medical procedures.

For lasers with pulse lengths of a millionth of a second or less, there are two basic cutting regimes:

Mid-infrared lasers with long wavelengths cut by burning. That is, they heat up the tissue to the point where the chemical bonds holding it together break down. Because they automatically cauterize the cuts that they make, infrared lasers are used frequently for surgery in areas where there is a lot of bleeding.

Shorter wavelength lasers in the near-infrared, visible and ultraviolet range cut by an entirely different mechanism. They create a series of micro-explosions that break the molecules apart. During each laser pulse, high-intensity light at the laser focus creates an electrically-charged gas known as a plasma. At the end of each laser pulse, the plasma collapses and the energy released produces the micro-explosions. As a result, these lasers -- particularly the ultraviolet ones -- can cut more precisely and produce less collateral damage than mid-infrared lasers. That is why they are being used for eye surgery, delicate brain surgery and microsurgery.

"This is the first study that looks at the plasma dynamics of ultraviolet lasers in living tissue," says Shane Hutson, assistant professor of physics at Vanderbilt University who conducted the research with post-doctoral student Xiaoyan Ma. "The subject has been extensively studied in water and, because biological systems are overwhelmingly water by weight, you would expect it to behave in the same fashion. However, we found a surprising number of differences."

One such difference involves the elasticity, or stretchiness, of tissue. By stretching and absorbing energy, the biological matrix constrains the growth of the micro-explosions. As a result, the explosions tend to be considerably smaller than they are in water. This reduces the damage that the laser beam causes while cutting flesh. This effect had been predicted, but the researchers found that it is considerably larger than expected.

Another surprising difference involves the origination of the individual plasma "bubbles." All it takes to seed such a bubble is a few free electrons. These electrons pick up energy from the laser beam and start a cascade process that produces a bubble that grows until it contains millions of quadrillions of free electrons. Subsequent collapse of this plasma bubble causes a micro-explosion. In pure water, it is very difficult to get those first few electrons. Water molecules have to absorb several light photons at once before they will release any electrons. So a high-powered beam is required.
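
The cascade described above is commonly idealized as avalanche ionization. In that model each free electron picks up enough energy from the beam to free another, so the population roughly doubles every generation. Under that simplifying assumption, a short Python sketch shows how few generations separate "a few" seed electrons from "millions of quadrillions." Reading those phrases as 3 and 10^21 electrons is our assumption, not a figure from the study.

```python
import math


def doublings_needed(seed_electrons, target_electrons):
    """Generations of an idealized avalanche (free-electron count doubles
    every generation) needed to grow from seed_electrons to at least
    target_electrons."""
    return math.ceil(math.log2(target_electrons / seed_electrons))


if __name__ == "__main__":
    # Assumed values: "a few" seed electrons = 3,
    # "millions of quadrillions" = 1e6 * 1e15 = 1e21.
    print(doublings_needed(3, 1e21))  # prints 69
```

Under this idealization, fewer than 70 doublings bridge the gap. That is consistent with the article's point that the hard step in pure water is obtaining the first few seed electrons.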

"But in a biological system there is a ubiquitous molecule, called NADH, that cells use to donate and absorb electrons. It turns out that this molecule absorbs photons at near ultraviolet wavelengths. So it produces seed electrons when exposed to ultraviolet laser light at very low intensities," says Hutson. This means that in tissue containing significant amounts of NADH, ultraviolet lasers don't need as much power to cut effectively as people have thought.

The cornea in the eye is an example of tissue that has very little NADH. As a result, it responds to an ultraviolet laser beam more like water than skin or other kinds of tissue, according to the researcher.

"Now that we have a better sense of how tissue properties affect the laser ablation process, we can do a better job of predicting how the laser will work with new types of tissue," says Hutson.

Technorati : Flesh, Laser, Micro-explosions, Surgery

Simulate Life And Death In The Universe

Astronomers Simulate Life And Death In The Universe

Stars always form in large groups, known as clusters, which astronomers distinguish by their age and size. Researchers at the Argelander Institute for Astronomy at the University of Bonn have now used computer simulations to answer the question of how star clusters are created from interstellar gas clouds and why they then develop in different ways. The scientists have solved, at least at a theoretical level, one of the oldest astronomical puzzles: whether star clusters differ in their internal structure.

Astronomical observations have shown that all stars are formed in star clusters. Astronomers distinguish between two kinds. On the one hand are small and, by astronomical standards, young star clusters containing from several hundred to several thousand stars. On the other are large, high-density globular star clusters of as many as ten million tightly packed stars, which are almost as old as the universe. No one knows how many star clusters of each type there might be, because scientists have not previously managed to fully compute the physical processes behind their genesis.

Stars and star clusters are formed as interstellar gas clouds collapse. Within these increasingly dense clouds, individual "lumps" emerge which, under their own gravitational pull, draw ever closer together and finally become stars. Much like the solar wind from our own Sun, the stars send out strong streams of charged particles. These "winds" literally sweep the remaining gas out of the cloud. What remains is a cluster that gradually disintegrates until its component stars move freely through the interstellar space of the Milky Way.

Scientists believe that our own Sun arose within a small star cluster which disintegrated in the course of its development. "Otherwise our planetary system would probably have been destroyed by a star moving close by," says Professor Dr. Pavel Kroupa of the Argelander Institute for Astronomy at Bonn University. To better understand the birth and death of star clusters, Professor Kroupa and Dr. Holger Baumgardt have developed a computer programme that simulates how the gas remaining in a cluster influences the paths taken by its stars.

Heavy star clusters live longer

The main focus of this research has been on the question of what the initial conditions must look like if a new-born star cluster is to survive for a long time. The Bonn astronomers discovered that clusters below a certain size are very easily destroyed by the radiation of their component stars. Heavy star clusters, on the other hand, enjoy significantly better "survival chances".

For astronomers, another important insight from this work is that both light and heavy star clusters do have the same origins. As Professor Kroupa explains, "It seems that when the universe was born there were not only globular clusters but also countless mini star clusters. A challenge now for astrophysics is to find their remains." The computations in Bonn have paved the way for this search by providing some valuable theoretical pointers.

The Argelander Institute has recently been equipped with five "GRAPE Computers", which operate 1,000 times faster than normal PCs. Even with this enormous calculating capacity, the machines require several weeks to complete the simulation. They are being deployed not only in research but also in research-related teaching: "Thanks to the GRAPE facilities, our students and junior academics are learning to exploit the power of supercomputers and the software developed specially for them." The Argelander Institute is regarded worldwide as a Mecca for the computation of stellar processes.
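
GRAPE ("GRAvity PipE") boards are special-purpose hardware built to compute the gravitational pull of every star on every other star. This direct-summation step dominates star-cluster simulations, and its cost grows as N². The pure-Python sketch below shows only that force kernel. It works in units where G = 1 and uses an assumed Plummer softening length. It is not the Bonn group's programme, which also models the residual gas.

```python
import math


def accelerations(positions, masses, softening=0.01, G=1.0):
    """Direct-summation gravitational accelerations for N point masses.

    Every ordered pair (i, j) is visited, so the cost grows as N**2; this
    pairwise sum is the workload GRAPE-style hardware is built to speed up.
    The Plummer softening length avoids a singularity when two stars pass
    very close to each other.
    """
    n = len(positions)
    acc = [[0.0, 0.0, 0.0] for _ in range(n)]
    eps2 = softening * softening
    for i in range(n):
        xi, yi, zi = positions[i]
        for j in range(n):
            if i == j:
                continue  # a star exerts no force on itself
            dx = positions[j][0] - xi
            dy = positions[j][1] - yi
            dz = positions[j][2] - zi
            r2 = dx * dx + dy * dy + dz * dz + eps2
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            acc[i][0] += G * masses[j] * dx * inv_r3
            acc[i][1] += G * masses[j] * dy * inv_r3
            acc[i][2] += G * masses[j] * dz * inv_r3
    return acc


if __name__ == "__main__":
    # Two unit masses 2 length units apart, no softening: each feels
    # G*m/r**2 = 1/4 toward the other.
    acc = accelerations([(-1.0, 0.0, 0.0), (1.0, 0.0, 0.0)], [1.0, 1.0], softening=0.0)
    print(acc)  # [[0.25, 0.0, 0.0], [-0.25, 0.0, 0.0]]
```

A full simulation would feed these accelerations into a time integrator at every step, which is why even fast hardware needs weeks for large clusters.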

New Superlensing Technique Brings Everything into Focus

Ultratight focusing over very short distances beats the best lenses; the discovery could bring the nanoworld up close and into focus

Light cannot be focused on anything smaller than its wavelength, or so says more than a century of physics wisdom. But a new study now shows that it is possible, if light is focused extremely close to a very special kind of lens.

The traditional limit on the resolution of light microscopes, which depends on how sharply light can be focused, is roughly the wavelength of visible light (around 500 nanometers). This limitation inspired the invention of the electron microscope for viewing smaller objects, such as viruses, that are only 10 to 300 nanometers in size. But the new study shows that placing a special pattern of circles in front of a laser could focus its beam down to 50 nanometers, tiny enough to illuminate viruses and nanoparticles.

To achieve this, scientists draw opaque concentric circles on a transparent plate with much shorter spacing than the wavelength of light, and vary the line thickness so that the circles are far apart at the center but practically overlap near the edge. This design ensures that light transmitted through the plate is brightest at the core and dimmer around the edges.

"This construction is a way to convert traveling waves into evanescent waves," says Roberto Merlin, a physicist at the University of Michigan at Ann Arbor and author of the study published today in the online journal Science Express. Unlike ordinary light waves (such as sunlight), which can travel forever, evanescent waves traverse only very short distances before dying out. Whereas most of the light shining on such a plate is reflected back, a portion of the light leaks out the other side in the form of evanescent waves. If these waves, which have slipped through the different slits between the circles, can blend before disappearing, they form a single bright spot much smaller than the wavelength. The plate effectively acts like a "superlens", and the focal length or distance between the lens and the spot is nearly the same as that between the plate's bright center and dim edge; the size of the spot is fixed by the spacing between the circles.

With current nanofabrication technology, scientists say, it is not unreasonable to imagine circles spaced 50 nanometers apart giving a spot of comparable size, about 10 times smaller than what conventional lenses can achieve. The catch, however, is that the smaller the spot, the faster it fades away from the plate. For instance, the intensity of the spot from a circle spacing of 50 nanometers would halve every 5.5 nanometers away from the plate, so anything to be illuminated would have to sit extremely close to it. Positioning with such nanometer-scale precision is well within present technological capabilities and is routinely used in other microscopy techniques, such as scanning tunneling microscopy.
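
The 5.5-nanometer halving length quoted above implies exponential decay: I(z) = I0 · 2^(-z / 5.5 nm). The minimal Python sketch below assumes the decay stays purely exponential at every distance (our simplification) and shows how quickly the spot dims.

```python
def relative_intensity(distance_nm, halving_length_nm=5.5):
    """Fraction of the focused spot's peak intensity left at distance_nm
    from the plate, assuming the intensity halves every halving_length_nm
    (5.5 nm is the article's figure for 50 nm circle spacing)."""
    return 0.5 ** (distance_nm / halving_length_nm)


if __name__ == "__main__":
    for d in (0.0, 5.5, 11.0, 22.0, 55.0):
        print(f"{d:5.1f} nm from the plate: {relative_intensity(d):.4f} of peak")
```

At 55 nm, ten halving lengths away, only about 0.1% of the peak intensity remains. That is why the specimen must sit within a few nanometers of the plate.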

Other so-called superlensing schemes are also under investigation, but researchers say this technique may be more tolerant of variations in the color of the light used. This matters because, to offset the rapid fading of the focused spot, scientists would want to use the brightest lasers available, which are usually pulsed lasers spanning a range of colors.

Merlin and his U.M. collaborator Anthony Grbic, an electrical engineer, are wrapping up the construction of a focusing system for microwaves based on the above theory; they say they're confident it will focus 30-centimeter wavelength microwaves onto a spot 1.5 centimeters wide. If a similar system could be built for light, it would enable the study of viral and nanoparticle structures by focusing light on them and detecting their scattered light. Other potential applications include higher-capacity CDs and DVDs, which are currently limited by the size of the laser spot used to encode individual bits.

Technorati : Focus, New, Superlensing, Technique

Astronauts Enter Harmony For First Time

ESA astronaut Paolo Nespoli and Expedition 16 Commander Peggy Whitson were the first astronauts to enter the newest addition to the International Space Station after opening Harmony's hatch at 14:24 CEST (12:24 UT). "It's a pleasure to be here in this very beautiful piece of hardware," said Nespoli, during a short ceremony to mark the occasion. "I would like to thank everybody who worked hard in making this possible and allowing the Space Station to be built even further."

Harmony is the second of three nodes, or connecting modules, for the Station, and its arrival paves the way for the addition of the European Columbus laboratory and the Japanese Kibo laboratory during upcoming Shuttle missions.

Carried into space inside Space Shuttle Discovery's cargo bay, the Italian-built Harmony is the first addition to the Space Station's pressurized volume in six years. The module adds an extra 34 m³ of living and working space to the orbital outpost.

First European module

The Harmony module, also known as Node 2, was installed in its temporary location on the port side of the Node 1 module during a six-and-a-half-hour spacewalk yesterday.

Once Discovery has undocked at the end of the STS-120 mission, the Expedition 16 crew will relocate Harmony to its permanent location at the forward end of the US Destiny lab.

Harmony was built in Europe, with Thales Alenia Spazio as prime contractor, under a barter agreement between NASA and ESA that includes the Shuttle launch of the European laboratory Columbus. It is the first European module permanently attached to the ISS.

Technorati : Astronauts, Enter, First, Harmony, Time

Public Health Risks from Climate Change are Key Concern: Comments by Professor Jonathan A. Patz

Message not to be Lost in Debate: Public Health Risks from Climate Change are Key Concern - Oct 26, 2007

Comment by Professor Jonathan A. Patz, University of Wisconsin, Madison

With all the attention on the Bush Administration's mishandling of Senate testimony by CDC Director Julie Gerberding, I fear that the central, clear message is being overshadowed by the (albeit errant) procedural aspects of the situation. As a Lead Author for the United Nations Intergovernmental Panel on Climate Change (IPCC) reports of 1995, 1998, 2001, and 2007 (and Health Co-Chair for the US National Assessment on Climate Change), I can reaffirm that the original CDC testimony was scientifically accurate and consistent with IPCC findings.

But more than enough press has focused on the handling of the testimony, and not enough on the important messages that Congress and the American public need to know about Global Warming. These are:

1) Our public's health is indeed at risk from the effects of climate change, acting via numerous hazardous exposure pathways, including more intense and frequent heat waves and storms; ozone smog pollution and increased pollen allergens; insect-borne and water-borne infectious diseases; and disease risks from outside the US (after all, we live in a globalized world). Some benefits from reduced cold and some decline in certain diseases can be expected; however, the scientific assessments have consistently found that, on balance, the health risks outweigh the benefits.

2) The Department of Health and Human Services, which includes the CDC and NIH, is responsible for protecting the health of the American public. Although extremes of climate can have broad, population-wide impacts, neither the CDC nor the NIH has directed adequate resources to address climate change, and to date funding has been minimal compared to the size of the health threat.

3) There are potentially large opportunities and co-benefits in addressing the health risks of global warming. Certainly, our public health infrastructure must be strengthened, e.g., by fortifying water supply systems, building heat and storm early warning and response programs, and enhancing disease modeling and surveillance. However, energy policy now becomes one and the same as public health policy. Reducing fossil fuel burning will: (a) further reduce air pollution, (b) improve our fitness (e.g., if urban transportation planning allows more Americans to travel by foot or bike rather than by car), and (c) lessen potential greenhouse warming.

In short, the challenges posed by climate change urgently demand improvements in both public health infrastructure AND energy conservation and urban planning policies; as such, climate change can present both enormous health risks and opportunities. But without funding from Congress to address climate change, the CDC has its hands tied.

Web Resources:

Global & Sustainable Environmental Health at the University of Wisconsin, Madison

http://www.sage.wisc.edu/pages/health.html

Website for Middle School teachers and students, and the general public

http://www.ecohealth101.org

Technorati : Climate Change, public health

Space Challenger : Astronomers Simulate Life And Death In The Universe

This image is of the spidery filaments and newborn stars of the Tarantula Nebula, a rich star-forming region also known as 30 Doradus. This cloud of glowing dust and gas is located in the Large Magellanic Cloud, the nearest galaxy to our own Milky Way, and is visible primarily from the Southern Hemisphere. This image of an interstellar cauldron provides a snapshot of the complex physical processes and chemistry that govern the birth - and death - of stars. (Credit: NASA Jet Propulsion Laboratory (NASA-JPL))

The findings have now been published in the science journal "Monthly Notices of the Royal Astronomical Society" (MNRAS 380, 1589).

Technorati : Astronomers, Space Challenger

Quantum Cascade Laser Nanoantenna Created, With a Wide Range of Potential Applications

In a major feat of nanotechnology engineering, researchers from Harvard University have demonstrated a laser with a wide range of potential applications in chemistry, biology and medicine. Called a quantum cascade (QC) laser nanoantenna, the device is capable of resolving the chemical composition of samples, such as the interior of a cell, with unprecedented detail.

The work was spearheaded by graduate students Nanfang Yu and Ertugrul Cubukcu and by Federico Capasso, Robert L. Wallace Professor of Applied Physics, all of Harvard's School of Engineering and Applied Sciences. The findings will be published as a cover feature of the October 22 issue of Applied Physics Letters. The researchers have also filed for U.S. patents covering this new class of photonic devices.

The laser's design consists of two gold rods separated by a nanometer-scale gap (a device known as an optical antenna) built on the facet of a quantum cascade laser, which emits invisible infrared light in the region of the spectrum where most molecules have their telltale absorption fingerprints. The nanoantenna creates a light spot of nanometric size, about fifty to a hundred times smaller than the laser wavelength; the spot can be scanned across a specimen to provide chemical images of the surface with superior spatial resolution.

"There's currently a major push to develop powerful tabletop microscopes with spatial resolution much smaller than the wavelength that can provide images of materials, and in particular biological specimens, with chemical information on a nanometric scale," says Federico Capasso.

While infrared microscopes based on the detection of molecular absorption fingerprints are commercially available and widely used to map the chemical composition of materials, the available light sources and optics limit their spatial resolution to scales well above the wavelength. So-called near-field infrared microscopes, which rely on an ultrasharp metallic tip scanned across the sample surface at nanometric distances, can provide ultrahigh spatial resolution, but so far their applications have been strongly limited by the use of bulky lasers with very limited tunability and wavelength coverage.

"By combining Quantum Cascade Lasers with optical antenna nanotechnology we have created for the first time an extremely compact device that will enable the realization of new ultrahigh spatial resolution microscopes for chemical imaging on a nanometric scale of a wide range of materials and biological specimens," says Capasso.

Quantum cascade (QC) lasers were invented and first demonstrated by Capasso and his group at Bell Labs in 1994. These compact, millimeter-length semiconductor lasers, which are now commercially available, are made by stacking nanometer-thick layers of semiconductor materials on top of each other. By varying the thickness of the layers, one can select the wavelength of the QC laser across essentially the entire infrared spectrum where molecules absorb, thus custom designing it for a specific application.

In addition, with suitable design, the wavelength of a particular QC laser can be made widely tunable. The range of applications of QC laser based chemical sensors is very broad, including pollution monitoring, chemical sensing, medical diagnostics such as breath analysis, and homeland security.

The team's co-authors are Kenneth Crozier, Assistant Professor of Electrical Engineering, and research associates Mikhail Belkin and Laurent Diehl, all of Harvard's School of Engineering and Applied Sciences; and David Bour, Scott Corzine, and Gloria Höfler, all formerly with Agilent Technologies. The research was supported by the Air Force Office of Scientific Research and the National Science Foundation. The authors also acknowledge the support of two Harvard-based centers, the Nanoscale Science and Engineering Center and the Center for Nanoscale Systems, a member of the National Nanotechnology Infrastructure Network.


Brain Acts Differently for Creative and Noncreative Thinkers

How does the brain act during creative thinking?

Why do some people solve problems more creatively than others? Are people who think creatively different from those who tend to think in a more methodical fashion?

These questions are part of a long-standing debate, with some researchers arguing that what we call "creative thought" and "noncreative thought" are not basically different. If this is the case, then people who are thought of as creative do not really think in a fundamentally different way from those who are thought of as noncreative. On the other side of this debate, some researchers have argued that creative thought is fundamentally different from other forms of thought. If this is true, then those who tend to think creatively really are somehow different.

A new study led by John Kounios, professor of psychology at Drexel University, and Mark Jung-Beeman of Northwestern University addresses these questions by comparing the brain activity of creative and noncreative problem solvers. The study, published in the journal Neuropsychologia, reveals a distinct pattern of brain activity, even at rest, in people who tend to solve problems with a sudden creative insight (an "Aha! moment") compared with people who tend to solve problems more methodically.

At the beginning of the study, participants relaxed quietly for seven minutes while their electroencephalograms (EEGs) were recorded to show their brain activity. The participants were not given any task to perform and were told they could think about whatever they wanted. Later, they were asked to solve a series of anagrams, scrambled letters that can be rearranged to form words (for example, MPXAELE = EXAMPLE). These can be solved deliberately and methodically by trying out different letter combinations, or with a sudden insight, an "Aha!" in which the solution pops into awareness. After each successful solution, participants indicated how the solution had come to them.
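The methodical strategy, trying out letter combinations one after another until a real word appears, can be sketched as a brute-force search. This is only an illustration under stated assumptions: the function name `solve_anagram_methodically` and the tiny word list are hypothetical, and the study itself does not describe participants' strategies in algorithmic terms.

```python
from itertools import permutations


def solve_anagram_methodically(scrambled, dictionary):
    """Try every ordering of the letters until one matches a known word.

    A hypothetical stand-in for the deliberate, trial-and-error strategy:
    no insight is involved, only exhaustive checking of combinations.
    """
    words = {w.upper() for w in dictionary}
    for combo in permutations(scrambled.upper()):
        candidate = "".join(combo)
        if candidate in words:
            return candidate
    return None  # no ordering of the letters forms a word in the list


# Hypothetical mini-dictionary for illustration only.
print(solve_anagram_methodically("MPXAELE", ["sample", "example", "maple"]))
```

For a seven-letter anagram this checks at most 5,040 orderings, which shows why the methodical route is reliable but slow compared with a solution that simply "pops into awareness".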

The participants were then divided into two groups, those who reported solving the problems mostly by sudden insight and those who reported solving them more methodically, and the resting-state brain activity of the two groups was compared. As predicted, the two groups displayed strikingly different patterns of brain activity during the resting period at the beginning of the experiment, before they knew that they would have to solve problems or even what the study was about.

One difference was that the creative solvers exhibited greater activity in several regions of the right hemisphere. Previous research has suggested that the right hemisphere of the brain plays a special role in solving problems with creative insight, likely due to right-hemisphere involvement in the processing of loose or "remote" associations between the elements of a problem, which is understood to be an important component of creative thought. The current study shows that greater right-hemisphere activity occurs even during a "resting" state in those with a tendency to solve problems by creative insight. This finding suggests that even the spontaneous thought of creative individuals, such as in their daydreams, contains more remote associations.

Second, creative and methodical solvers exhibited different activity in areas of the brain that process visual information. The pattern of "alpha" and "beta" brainwaves in creative solvers was consistent with diffuse rather than focused visual attention. This may allow creative individuals to broadly sample the environment for experiences that can trigger remote associations to produce an Aha! Moment.

For example, a glimpse of an advertisement on a billboard or a word spoken in an overheard conversation could spark an association that leads to a solution. In contrast, the more focused attention of methodical solvers reduces their distractibility, allowing them to effectively solve problems for which the solution strategy is already known, as would be the case for balancing a checkbook or baking a cake using a known recipe.

Thus, the new study shows that basic differences in brain activity between creative and methodical problem solvers exist and are evident even when these individuals are not working on a problem. According to Kounios, "Problem solving, whether creative or methodical, doesn't begin from scratch when a person starts to work on a problem. His or her pre-existing brain-state biases a person to use a creative or a methodical strategy."

In addition to contributing to current knowledge about the neural basis of creativity, this study suggests the possible development of new brain imaging techniques for assessing potential for creative thought, and for assessing the effectiveness of methods for training individuals to think creatively.

Journal reference: Kounios, J., Fleck, J.I., Green, D.L., Payne, L., Stevenson, J.L., Bowden, E.M., & Jung-Beeman, M. The origins of insight in resting-state brain activity, Neuropsychologia (2007), doi:10.1016/j.neuropsychologia.2007.07.013

See also:

Jung-Beeman, M., Bowden, E.M., Haberman, J., Frymiare, J.L., Arambel-Liu, S., Greenblatt, R., Reber, P.J., & Kounios, J. (2004). Neural activity when people solve verbal problems with insight. PLoS Biology, 2, 500-510.

Kounios, J., Frymiare, J.L., Bowden, E.M., Fleck, J.I., Subramaniam, K., Parrish, T.B., & Jung-Beeman, M.J. (2006). The prepared mind: Neural activity prior to problem presentation predicts subsequent solution by sudden insight. Psychological Science, 17, 882-890.

Source: Drexel University, via www.sciencedaily.com


Alternative Fuel : Is Corn the Biofuels King?

A researcher finds an alternative source of fuel.

When University of Illinois crop scientist Fred Below began growing tropical maize, the form of corn grown in the tropics, he was looking for novel genes for the utilization of nitrogen fertilizer and was hoping to discover information that could be useful to American corn producers.

Now, however, it appears that maize itself may prove to be the ultimate U.S. biofuels crop.

Early research results show that tropical maize, when grown in the Midwest, requires few crop inputs such as nitrogen fertilizer, chiefly because it does not produce any ears. It also is easier for farmers to integrate into their current operations than some other dedicated energy crops because it can be easily rotated with corn or soybeans, and can be planted, cultivated and harvested with the same equipment U.S. farmers already have. Finally, tropical maize stalks are believed to require less processing than corn grain, corn stover, switchgrass, Miscanthus giganteus and the scores of other plants now being studied for biofuel production.

What tropical maize does produce, straight from the field with no processing, is stalks containing 25 percent or more sugar, mostly sucrose, fructose and glucose.

"Corn is a short-day plant, so when we grow tropical maize here in the Midwest the long summer days delay flowering, which causes the plant to grow very tall and produce few or no ears," says Below. Without ears, these plants concentrate sugars in their stalks, he adds. Those sugars could have a dramatic effect on Midwestern production of ethanol and other biofuels.

"Midwestern-grown tropical maize easily grows 14 or 15 feet tall, compared to the 7-1/2 feet that is average for conventional hybrid corn. It is all in these tall stalks," Below explains. "In our early trials, we are finding that these plants build up sugar to a level of 25 percent or higher in their stalks."

This differs from conventional corn and other crops being grown for biofuels, in which the starch in corn grain and the cellulose in switchgrass, corn stover and other biofuel crops must first be treated with enzymes to convert them into sugars that can then be fermented into alcohols such as ethanol.

Storing simple sugars is also more cost-effective for the plant, because it takes a lot of energy to make the complex starches, proteins, and oils present in corn grain. Because tropical maize produces no grain, these energy savings per plant could result in more total energy per acre.

"In terms of biofuel production, tropical maize could be considered the 'Sugarcane of the Midwest,'" Below said. "The tropical maize we're growing here at the University of Illinois is very lush, very tall, and very full of sugar."

He added that his early trials also show that tropical maize requires much less nitrogen fertilizer than conventional corn, and that the stalks actually accumulate more sugar when less nitrogen is available. Nitrogen fertilizer is one of the major costs of growing corn.

He explained that sugarcane used in Brazil to make ethanol is desirable for the same reason: it produces lots of sugar without a high requirement for nitrogen fertilizer, and this sugar can be fermented to alcohol without the middle steps required by high-starch and cellulosic crops. But sugarcane can't be grown in the Midwest.

The tall stalks of tropical maize are so full of sugar that producers growing it for biofuel production will be able to supply a raw material at least one step closer to being turned into fuel than are ears of corn.

"And growing tropical maize doesn't break the farmers' rotation. You can grow tropical maize for one year and then go back to conventional corn or soybeans in subsequent years," Below said. "Miscanthus, on the other hand, is thought to need a three-year growth cycle between initial planting and harvest, and then your land is in Miscanthus. Returning to corn or soybeans requires removing the Miscanthus rhizomes."

Below is studying tropical maize along with doctoral candidate Mike Vincent and postdoctoral research associate Matias Ruffo, in conjunction with U of I Associate Professor Stephen Moose. The high sugar yields of tropical maize came to light through cooperative work between Below and Moose to characterize genetic variation in response to nitrogen fertilizers.

Currently supported by the National Science Foundation, these studies are key to developing maize hybrids with improved nitrogen-use efficiency. Both Below and Moose are members of the Illinois Maize Breeding and Genetics Laboratory, which has a long history of research identifying new uses for the maize crop.

Moose now directs one of the longest-running plant genetics experiments in the world, in which more than a century of selective breeding has been applied to alter carbon and nitrogen accumulation in the maize plant. Continued collaboration between Below and Moose will investigate whether materials from these long term selection experiments will further enhance sugar yields from tropical maize.


Research : Dark Matter

We believe that most of the matter in the universe is dark, i.e. it cannot be detected from the light it emits (or fails to emit). This is "stuff" that cannot be seen directly, so what makes us think that it exists at all? Its presence is inferred indirectly from the motions of astronomical objects, specifically from observations of stars, galaxies, and galaxy clusters and superclusters. Dark matter is also required to let gravity amplify the small fluctuations in the Cosmic Microwave Background enough to form the large-scale structures that we see in the universe today.
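The step from "motions" to "mass" uses Newtonian gravity for a circular orbit: setting v²/r equal to GM(r)/r² gives the mass enclosed within radius r as M(r) = v²r/G. If a galaxy's mass were concentrated in its bright centre, orbital speeds far out should fall off roughly as 1/√r; speeds that instead stay roughly flat mean M(r) keeps growing with r, beyond where the light runs out. A minimal sketch, assuming circular orbits; the 220 km/s speed and the 8 and 16 kpc radii are illustrative round numbers, not measurements from this post.

```python
G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
KPC = 3.086e19     # one kiloparsec in metres
M_SUN = 1.989e30   # one solar mass in kg


def enclosed_mass(v, r):
    """Mass (kg) inside radius r (m) needed for a circular orbit at speed v (m/s).

    From v**2 / r = G * M(r) / r**2, so M(r) = v**2 * r / G.
    """
    return v ** 2 * r / G


# Illustrative flat rotation curve: the same speed at two radii.
for r_kpc in (8, 16):
    m = enclosed_mass(220e3, r_kpc * KPC)
    print(f"r = {r_kpc} kpc: M(r) is about {m / M_SUN:.1e} solar masses")
```

With the speed held constant, doubling the radius doubles the inferred mass, which is the kind of "missing" mass the motions of stars and galaxies point to.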