New research at MIT has revealed for the first time the role of bone's atomistic structure in a toughening mechanism that incorporates two theories previously proposed by researchers eager to understand the secret behind the material's lightweight strength.

Past experimental studies have revealed a number of different mechanisms at different scales of focus, rather than a single theory. The combination mechanism uncovered by the MIT researchers allows for the sacrifice of a small piece of the bone in order to save the whole, helps explain why bone tolerates small cracks, and seems to be adapted specifically to accommodate bone's need for continuous rebuilding from the inside out.

"The newly discovered molecular mechanism unifies controversial attempts of explaining sources of the toughness of bone, because it illustrates that two of the earlier explanations play key roles at the atomistic scale," said the study's author, Esther and Harold E. Edgerton Professor Markus Buehler of MIT's Department of Civil and Environmental Engineering.

"It's quite possible that each scale of bone--from the molecular on up--has its own toughening mechanism," said Buehler. "This hierarchical distribution of toughening may be critical to explaining the intriguing properties of bone and laying the foundation for new materials design that includes the nanostructure as a specific design variable."

Unlike synthetic building materials, which tend to be homogeneous throughout, bone is heterogeneous living tissue whose cells undergo constant change. Scientists have classified bone's basic structure into a hierarchy of seven levels of increasing scale. Level 1 bone consists of bone's two primary components: chalk-like hydroxyapatite and collagen fibrils, which are strands of tough, chewy proteins. Level 2 bone comprises a merging of these two into mineralized collagen fibrils that are much stronger than the collagen fibrils alone. The hierarchical structure continues in this way through increasingly large combinations of the two basic materials until reaching level 7, or whole bone.

Buehler scaled down his model to the atomistic level to see how the molecules fit together and, equally important for materials scientists and engineers, how and when they break apart. More precisely, he looked at how the chemical bonds within and between molecules respond to force. Last year, he analyzed for the first time the characteristic staggered molecular structure of collagen fibrils, the precursor to level 1 bone.

In his newer research, he studied the molecular structure of the mineralized collagen fibrils that make up level 2 bone, hoping to find the mechanism behind bone's strength, which is considerable for such a lightweight, porous material.

At the molecular level, the mineralized collagen fibrils are made up of strings of alternating collagen molecules and consistently sized hydroxyapatite crystals. These strings are "stacked" together in a staggered fashion such that the crystals appear in stair-step configurations. Weak bonds form between the crystals and molecules in the strings and between the strings.

When pressure is applied to the fabric-like fibrils, some of the weak bonds between the collagen molecules and crystals break, creating small gaps or stretched areas in the fibrils. This stretching spreads the pressure over a broader area, and in effect, protects other, stronger bonds within the collagen molecule itself, which might break outright if all the pressure were focused on them. The stretching also lets the tiny crystals shift position in response to the force, rather than shatter, which would be the likely response of a larger crystal.
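The sacrificial-bond idea described above can be illustrated with a toy energy tally. This is only a sketch under loudly stated assumptions, not Buehler's atomistic simulation: the function name, the linear-spring treatment of the strong bond, and every number (in arbitrary units) are hypothetical and chosen purely for illustration.

```python
def energy_to_failure(strong_limit, stiffness, n_weak=0, weak_limit=0.0, slip=0.0):
    """Toy estimate of energy absorbed before the strong bond fails.

    Assumptions (illustrative only, arbitrary units):
    - the strong bond behaves like a linear spring that fails at `strong_limit`
    - each weak bond breaks at force `weak_limit` and then lets the fibril
      slide a distance `slip`, dissipating roughly weak_limit * slip
    """
    if n_weak and weak_limit >= strong_limit:
        raise ValueError("weak bonds must break before the strong bond does")
    # Elastic energy stored in a linear spring loaded up to its breaking force.
    elastic = strong_limit ** 2 / (2 * stiffness)
    # Energy dissipated by breaking the weak bonds one after another.
    dissipated = n_weak * weak_limit * slip
    return elastic + dissipated


# Hypothetical numbers: same strong bond, with and without sacrificial bonds.
brittle = energy_to_failure(strong_limit=10.0, stiffness=100.0)
tough = energy_to_failure(strong_limit=10.0, stiffness=100.0,
                          n_weak=20, weak_limit=2.0, slip=0.1)
print(f"without weak bonds: {brittle:.2f}, with weak bonds: {tough:.2f}")
```

In this toy picture the strong bond is no stronger than before; the extra energy is absorbed entirely by the weak bonds that break and slip first, which is the qualitative point of the paragraph above.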

Previously, some researchers suggested that the fundamental key to bone's toughness is the "molecular slip" mechanism that allows weak bonds to break and "stretch" the fabric without destroying it. Others have cited the characteristic length of bone's hydroxyapatite crystals (a few nanometers) as an explanation for bone's toughness; the crystals are too small to break easily.

At the atomistic scale, Buehler sees the interplay of both these mechanisms. This suggests that both of the competing explanations may be correct: bone relies on different toughening mechanisms at different scales.

Buehler also discovered something notable about bone's ability to tolerate gaps in the stretched fibril fabric. These gaps are of the same magnitude--several hundred micrometers--as the basic multicellular units, or BMUs, associated with bone's remodeling. A BMU is a group of cells that work together like a small boring tool, eating away old bone at one end and replacing it at the other, and forming small crack-like cavities in between as it works its way through the tissue.

Thus, the mechanism responsible for bone's strength at the molecular scale also explains how bone can remain so strong--even though it contains those many tiny cracks required for its renewal.

This information could prove very useful to civil engineers, who have always used materials like steel that gain strength through density. Nature, however, creates strength in bone by taking advantage of the gaps, which themselves are made possible by the material's hierarchical structure.

"Engineers typically over-dimension structures in order to make them robust. Nature creates robustness by hierarchical structures," said Buehler.

This work was funded by a National Science Foundation CAREER award and a grant from the Army Research Office.

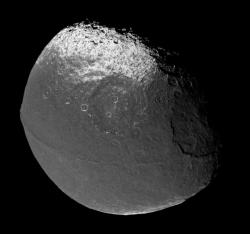

MIT Professor Markus Buehler has helped reveal why bones are so tough. The object on the screen is a triple helical tropocollagen molecule, a fundamental building block of bone. Next to the molecule are nanosized, chalk-like hydroxyapatite crystals. In his work, he simulates the behavior of the composite of tropocollagen and hydroxyapatite during deformation. Photo / Donna Coveney